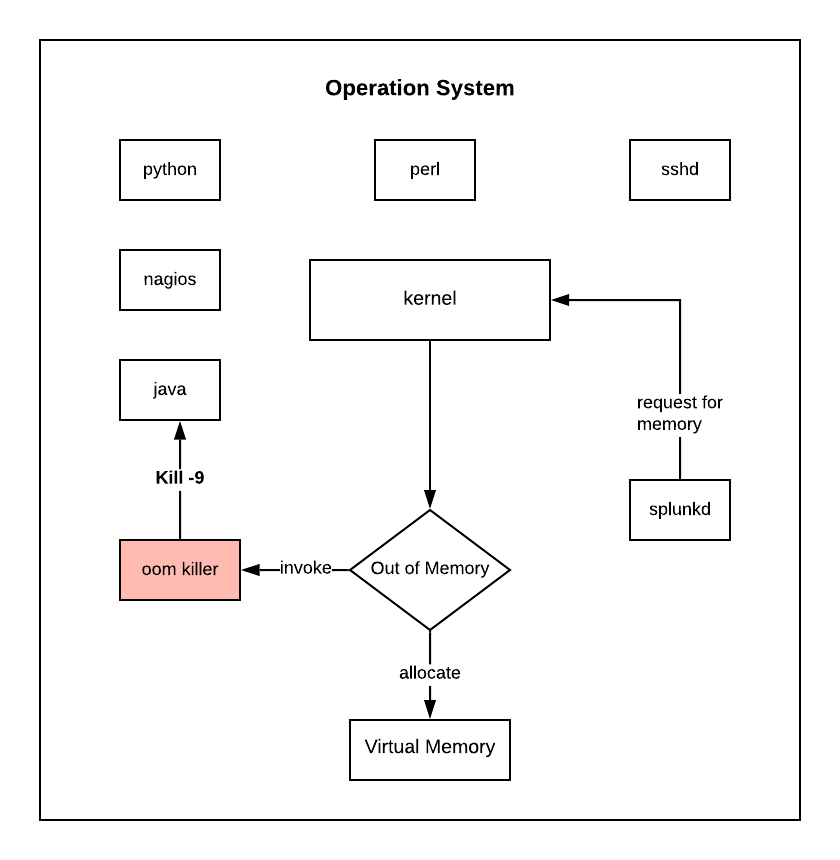

The purpose of this article is to explain how a kernel can invoke (out-of-memory) oom killer to kill a process abruptly which might be a java or node process of your application and what steps we can take to resolve such issues.

- When OOM Killer is invoked by kernel?

- Where to see if oom killer killed your process?

- How to resolve oom killer issues?

When OOM Killer is invoked by kernel?

In Linux based systems, every process has a oom score, you can find oom score for a process like below.

-bash-4.1$ ps -ef | grep /bin/java | grep -v grep \

| awk '{print $2}' | xargs -i cat /proc/{}/oom_score

881

-bash-4.1$ ps -ef | grep 'splunkd pid' | grep -v grep \

| awk '{print $2}' | xargs -i cat /proc/{}/oom_score

0

-bash-4.1$ ps -ef | grep /usr/sbin/sshd | grep -v grep \

| awk '{print $2}' | xargs -i cat /proc/{}/oom_score

0

As you can see /bin/java process has an 881 oom score, whereas splunkd and sshd has a 0 oom score

If splunkd asked for more memory and if there is no enough virtual memory, kernel invokes oom killer on any process which

has a high oom score, in this case, its /bin/java. Here /bin/java is the main backend application and splunkd is the Splunk

forwarder to forward the logs of /bin/java process.

Where to see if oom killer killed your process?

You can check system logs at /var/log/messages whenever the oom killer is invoked. You will see similar logs like below

Oct 8 11:12:20 app-44276360-12-70800876 kernel: splunkd invoked oom-killer: gfp_mask=0x201da, order=0, oom_adj=0,

oom_score_adj=0

...

Oct 8 11:12:20 app-44276360-12-70800876 kernel: Out of memory: Kill process 27364 (java) score 933 or sacrifice child

If you are using any Java service wrapper

Then you might see below logs in wrapper logs

STATUS | wrapper | 2017/10/08 11:12:20 | JVM received a signal SIGKILL (9).

STATUS | wrapper | 2017/10/08 11:12:20 | JVM process is gone.

ERROR | wrapper | 2017/10/08 11:12:20 | JVM exited unexpectedly.

STATUS | wrapper | 2017/10/08 11:12:24 | Launching a JVM...

Note: If /bin/java is asking for more memory, and if no virtual memory is available, then oom killer kills /bin/java

process. Now the issue here is we will not be able to find any memory leaks happening at the JVM level if an oom-killer kills it.

How to resolve oom killer issues?

Adjust oom score

One way to resolve this is by having a low oom score for the application process.

For example — I used below java program, which requests memory in a loop.

import java.util.Scanner;

public class DemoLimit {

private static Scanner scanner = new Scanner( System.in );

public static void main(String[] args) {

java.util.List<int[]> l = new java.util.ArrayList();

try {

for (int i = 10000; i < 100000; i++) {

if(i == 10007) {

String input = scanner.nextLine();

}

System.out.println(i);

l.add(new int[100000000]);

}

} catch (Throwable t) {

t.printStackTrace();

}

}

}

Similarly I used another C program, which also requests memory in a loop

#include <stdio.h>

#include <stdlib.h>

int main (void) {

int n = 0;

while (1) {

if (malloc(1<<20) == NULL) {

printf("malloc failure after %d MiB\n", n);

return 0;

}

printf ("got %d MiB\n", ++n);

if(n == 10000) {

getchar();

}

}

}

View rawdemo3.c hosted with ❤ by GitHub

Before adjusting the oom score for the java process. When demo3 (with same oom score) process asked memory, oom killed java process.

➜ ~ java -Xmx4g DemoLimit > /dev/null &

[1] 19668

Now, if we look at the score of above process 19668, below are the oom scores

➜ ~ more /proc/19668/oom_adj

0

➜ ~ more /proc/19668/oom_score_adj

0

Now run the C program

➜ ~ gcc demo3.c -o demo3.out

➜ ~ ./demo3.out > /dev/null &

[2] 19713

After some time, oom killed 19668 PID of java.

➜ ~

[1] + 19668 killed java -Xmx4g DemoLimit > /dev/null

if you check /var/log/messages you can see that demo3.out invoked oom-killer and kernel killed java process

Oct 17 22:54:52 kernel: [202078.985459] demo3.out invoked oom-killer:

gfp_mask=0x14280ca(GFP_HIGHUSER_MOVABLE|__GFP_ZERO), nodemask=0, order=0, oom_score_adj=0

Oct 17 22:54:52 kernel: [202078.985460] demo3.out cpuset=/ mems_allowed=0

...

Oct 17 22:54:52 kernel: [202078.985642] Out of memory: Kill process 19668 (java) score 779 or sacrifice child

Oct 17 22:54:52 kernel: [202078.985653] Killed process 19668 (java) total-vm:4919756kB, anon-rss:3146852kB,

file-rss:0kB, shmem-rss:0kB

After adjusting the oom score for the java process. When demo3 process asked memory, oom killed demo3

➜ ~ java -Xmx4g DemoLimit > /dev/null &

[1] 19793

➜ ~

[1] + 19793 suspended (tty input) java -Xmx4g DemoLimit > /dev/null

Adjusted the score for above java process 19793 like below.

➜ ~ sudo echo -17 > /proc/19793/oom_adj

➜ ~ sudo echo -1000 > /proc/19793/oom_score_adj

➜ ~ more /proc/19793/oom_adj

-17

➜ ~ more /proc/19793/oom_score_adj

-1000

Now run demo3.c program

➜ ~ ./demo3.out > /dev/null &

[2] 19834

After some time, you will see that 19834 got killed by an oom killer.

➜ ~

[2] 19834 killed ./demo3.out > /dev/null

if you check /var/log/messages on demo3 overcommit, demo3 process was selected to be killed instead of java.

Oct 17 23:04:31 kernel: [202657.851735] demo3.out invoked oom-killer:

gfp_mask=0x14280ca(GFP_HIGHUSER_MOVABLE|__GFP_ZERO), nodemask=0, order=0, oom_score_adj=0

Oct 17 23:04:31 kernel: [202657.851736] demo3.out cpuset=/ mems_allowed=0

...

Oct 17 23:04:31 kernel: [202657.851954] Out of memory: Kill process 19834 (demo3.out) score 93 or sacrifice child

Oct 17 23:04:31 kernel: [202657.851958] Killed process 19834 (demo3.out) total-vm:64397816kB, anon-rss:250256kB,

file-rss:0kB, shmem-rss:0kB

System impact perspective, we need to make sure oom scores are given based on priority — for example, sshd oom score is

-17, we can see java oom score as -16 & splunk oom score as -15.

Can we adjust the overcommit behavior of the kernel?

Linux kernel provides a setting /proc/sys/vm/overcommit_memory which is by default 0 . When it is 0 on the overcommit kill

process with a low oom score, when it is 1 always overcommit, 2 on overcommit more than swap + x% of physical RAM do not

kill but give errors on memory allocation. You can refer to kernel overcommit documentation

Let’s see in action by having different settings and figuring out the best option.

Below is the default behavior —

➜ ~ more /proc/sys/vm/overcommit_memory

0

➜ ~ more /proc/sys/vm/overcommit_ratio

50

Out of 3.2 GB available, from 1 to 3 GB Xmx, java died of itself due to out of memory. When Xmx is 4GB,

the kernel could not allocate and kills the java process.

➜ ~ java -Xmx1g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx2g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx3g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx4g Demo

[1] 8956 killed java -Xmx4g Demo

Changed the overcommit default settings like below

➜ ~ more /proc/sys/vm/overcommit_memory

2

➜ ~ more /proc/sys/vm/overcommit_ratio

80

Java was not able to allocate more than 800m

➜ ~ java -Xmx800m Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx900m Demo

Error occurred during initialization of VM

Could not reserve enough space for object heap

Could not create the Java virtual machine.

➜ ~ java -Xmx1g Demo

Error occurred during initialization of VM

Could not reserve enough space for object heap

Could not create the Java virtual machine.

The above settings did not help. How about adding swap space?

Follow the below steps to create swap space.

## Be as root

-bash-4.1$ sudo bash

## check if you don’t have any swapfile created before

# swapon -s

Filename Type Size Used Priority

## check if fallocate is present

# which fallocate

/usr/bin/fallocate

## Create a swap file. I kept 2G here.

# fallocate -l 2G /swapfile

## Verify if swapfile got created

# ls -lh /swapfile

-rw-r--r-- 1 root root 2.0G Oct 17 18:29 /swapfile

## Give permissions should be 600

# chmod 600 /swapfile

## Making sure permissions got applied

# ls -lh /swapfile

-rw------- 1 root root 2.0G Oct 17 18:29 /swapfile

## Make sure we have mkswap

# which mkswap

/sbin/mkswap

## create the swapfile

# mkswap /swapfile

mkswap: /swapfile: warning: don't erase bootbits sectors

on whole disk. Use -f to force.

Setting up swapspace version 1, size = 2097148 KiB

no label, UUID=e6b2850d-47dc-4b0c-8832-c1ea9f177a07

## enable swap

# swapon /swapfile

# swapon -s

Filename Type Size Used Priority

/swapfile file 2097144 0 -1

Now we have swap space, let’s try the below settings

➜ ~ free -m

total used free shared buff/cache available

Mem: 3949 471 3342 18 134 3284

Swap: 1897 0 1897

If we go with below overcommit settings with above swap space

➜ ~ more /proc/sys/vm/overcommit_memory

2

➜ ~ more /proc/sys/vm/overcommit_ratio

80

With 3.2GB rss+ 1.8 swap, for 3GB JVM is not allocatable.

➜ ~ java -Xmx1g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx2g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx3g Demo

Error occurred during initialization of VM

Could not reserve enough space for object heap

Could not create the Java virtual machine.

How about going with default overcommit settings with swap space.

➜ ~ more /proc/sys/vm/overcommit_memory

0

➜ ~ more /proc/sys/vm/overcommit_ratio

50

With 3.2GB rss + 1.8 swap = 5 GB, regardless of what defined in Xmx, java died by itself.

➜ ~ java -Xmx1g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx4g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx8g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

➜ ~ java -Xmx100g Demo

java.lang.OutOfMemoryError: Java heap space

at Demo.main(Demo.java:6)

With default overcommit settings and having swap space, we avoided oom-killer for the java process, and now, by having -XX:+HeapDumpOnOutOfMemoryError

option at the JVM level, if it crashes, it will generate a heap dump; we can further analyze it to find the cause.

I hope this gave some idea on debugging when an application is getting killed abruptly by kernel issuing oom-killer.

– RC

Comments